Definition (classification context)

For classification tasks, the terms true positives, true negatives, false positives, and false negatives (see also Type I and type II errors) compare the results of the classifier under test with trusted external judgments. The terms positive and negative refer to the classifier's prediction (sometimes known as the observation), and the terms true and false refer to whether that prediction corresponds to the external judgment (sometimes known as the expectation). This is illustrated by the table below:

| | Actual class (expectation): positive | Actual class (expectation): negative |
|---|---|---|
| Predicted class (observation): positive | tp (true positive): correct result | fp (false positive): unexpected result |
| Predicted class (observation): negative | fn (false negative): missing result | tn (true negative): correct absence of result |

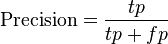
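
To make the four cells concrete, here is a minimal Python sketch that counts them from two lists of binary labels. The function name `confusion_counts`, the 1/0 label encoding, and the sample data are assumptions chosen for illustration, not part of the original text.

```python
def confusion_counts(actual, predicted):
    """Count (tp, fp, fn, tn) for binary labels, assuming 1 = positive, 0 = negative."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == 1 and a == 1:
            tp += 1  # predicted positive, actually positive
        elif p == 1 and a == 0:
            fp += 1  # predicted positive, actually negative
        elif p == 0 and a == 1:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1  # predicted negative, actually negative
    return tp, fp, fn, tn


# Hypothetical labels, made up for this example.
actual = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 0, 1, 1, 0, 0, 0, 1]
print(confusion_counts(actual, predicted))  # (3, 1, 1, 3)
```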

Precision and recall are then defined as:[4]

[Intuition: if the classifier predicts 100 positives and only 30 of them are actually positive, precision is 30/100 = 0.3.]

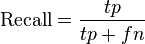

[Intuition: if there are 200 actual positives in total and the classifier finds only 40 of them, recall is 40/200 = 0.2.]
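
The two intuitive examples can be checked with a short Python sketch of the standard definitions, precision = tp / (tp + fp) and recall = tp / (tp + fn). The function names are illustrative, and returning 0.0 when the denominator is zero is an assumed convention rather than something stated in the source.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are actually positive."""
    denom = tp + fp
    return tp / denom if denom else 0.0  # assumed convention for an empty denominator


def recall(tp, fn):
    """Fraction of actual positives that the classifier found."""
    denom = tp + fn
    return tp / denom if denom else 0.0  # assumed convention for an empty denominator


# 100 predicted positives, 30 of them correct: tp = 30, fp = 70.
print(precision(tp=30, fp=70))  # 0.3

# 200 actual positives, 40 of them found: tp = 40, fn = 160.
print(recall(tp=40, fn=160))  # 0.2
```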

Recall in this context is also referred to as the true positive rate or sensitivity, and precision is also referred to as positive predictive value (PPV). Other related measures used in classification include the true negative rate, also called specificity, and accuracy.[4]

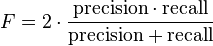
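
As a rough sketch, the true negative rate and accuracy can be computed from the same four counts using their standard definitions, tn / (tn + fp) and (tp + tn) / (tp + tn + fp + fn). The function names and the zero-denominator fallback are assumptions made for this example.

```python
def true_negative_rate(tn, fp):
    """Specificity: fraction of actual negatives that were predicted negative."""
    denom = tn + fp
    return tn / denom if denom else 0.0  # assumed convention for an empty denominator


def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that were correct."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


# Hypothetical counts: tp = 3, fp = 1, fn = 1, tn = 3.
print(true_negative_rate(tn=3, fp=1))  # 0.75
print(accuracy(tp=3, tn=3, fp=1, fn=1))  # 0.75
```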

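A measure that combines precision and recall is the harmonic mean of precision and recall, the traditional F-measure or balanced F-score:

$$F = 2 \cdot \frac{\mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}}$$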
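
A minimal Python sketch of this harmonic mean follows. The name `f1_score` is chosen here for illustration, and returning 0.0 when both inputs are zero is an assumed convention.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (balanced F-score)."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both inputs are zero
    return 2 * precision * recall / (precision + recall)


# When precision equals recall, the harmonic mean equals that shared value.
print(f1_score(0.5, 0.5))  # 0.5
```

Because the harmonic mean is pulled toward the smaller of the two values, a classifier cannot reach a high F-score by maximizing precision or recall alone.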

Reference

http://en.wikipedia.org/wiki/Precision_and_recall

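Compressed labeling on distilled labelsets for multi-label learning: equations 85 and 86 give the corresponding precision and recall for the multi-label setting. (For example, if the actual labels of the i-th sample are 1 0 0 and the predicted labels are 1 1 0, the value for that sample is 1/2, since one of the two predicted labels is correct. This matches the single-label meaning well and is easy to interpret.)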
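
The per-sample example can be reproduced with a short Python sketch. This is an illustration of the usual per-sample definitions (correct labels divided by predicted labels, and correct labels divided by actual labels), not necessarily the exact form of equations 85 and 86 in the paper; the function name is hypothetical.

```python
def sample_precision_recall(actual, predicted):
    """Per-sample precision and recall for 0/1 label vectors of equal length."""
    correct = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 1)
    n_predicted = sum(predicted)  # number of labels predicted as present
    n_actual = sum(actual)        # number of labels actually present
    p = correct / n_predicted if n_predicted else 0.0  # assumed fallback
    r = correct / n_actual if n_actual else 0.0        # assumed fallback
    return p, r


# Actual labels 1 0 0, predicted labels 1 1 0.
print(sample_precision_recall([1, 0, 0], [1, 1, 0]))  # (0.5, 1.0)
```

Under these definitions the 1/2 in the example is the per-sample precision, while the per-sample recall is 1 because the single actual label was predicted.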
