Soft-Edged Shadows

by Anirudh.S Shastry

ADVERTISEMENT

<a href="http://ad.doubleclick.net/click%3Bh=v8/34be/3/0/%2a/o%3B54222021%3B0-0%3B0%3B14583084%3B4307-300/250%3B18768897/18786792/1%3B%3B%7Esscs%3D%3fhttp://usa.autodesk.com/adsk/servlet/item?id=7674744&amp;siteID=123112&amp;CMP=ILC-ME_O5" target="_blank">

<img src="http://m1.2mdn.net/1001314/flip_300.gif" border="0" />

</a>

|

Introduction

Originally, dynamic shadowing techniques were possible only in a limited way. But with the advent of powerful programmable graphicshardware, dynamic shadow techniques have nearly completely replaced static techniques like light mapping and semi-dynamic techniques like projected shadows. Two popular dynamic shadowing techniques are shadow volumes and shadow mapping.

A closer look

The shadow volumes technique is a geometry based technique that requires the extrusion of the geometry in the direction of the light to generate a closed volume. Then, via ray casting, the shadowed portions of the scene can be determined (usually the stencil buffer is used to simulate ray-casting). This technique is pixel-accurate and doesn't suffer from any aliasing problems, but as with any technique, it suffers from its share of disadvantages. Two major problems with this technique are that it is heavily geometry dependent and fill-rate intensive. Because of this, shadow mapping is slowly becoming more popular.

Shadow mapping on the other hand is an image space technique that involves rendering the scene depth from the light's point of view and using this depth information to determine which portions of the scene in shadow. Though this technique has several advantages, it suffers from aliasing artifacts and z-fighting. But there are solutions to this and since the advantages outweigh the disadvantages, this will be the technique of my choice in this article.

Soft shadows

Hard shadows destroy the realism of a scene. Hence, we need to fake soft shadows in order to improve the visual quality of the scene. A lot of over-zealous PHD students have come up with papers describing soft shadowing techniques, but in reality, most of these techniques are not viable in real-time, at least when considering complex scenes. Until we have hardware that can overcome some of the limitations of these techniques, we will need to stick to more down-to-earth methods.

In this article, I present an image space method to generate soft-edged shadows using shadow maps. This method doesn't generate perfectly soft shadows (no umbra-penumbra). But it not only solves the aliasing problems of shadow mapping, it improves the visual quality by achieving aesthetically pleasing soft edged shadows.

So how does it work?

First, we generate the shadow map as usual by rendering the scene depth from the light's point of view into a floating point buffer. Then, instead of rendering the scene with shadows, we render the shadowed regions into a screen-sized buffer. Now, we can blur this using a bloom filter and project it back onto the scene in screen space. Sounds simple right?

In this article, we only deal with spot lights, but this technique can easily be extended to handle point lights as well.

Here are the steps:

- Generate the shadow map as usual by writing the scene depth into a floating point buffer.

- Render the shadowed portions of the scene after depth comparison into fixed point texture, without any lighting.

- Blur the above buffer using a bloom filter (though we use a separable Gaussian filter in this article, any filter can be used).

- Project the blurred buffer onto the scene in screen space to get cool soft-edged shadows, along with full lighting.

Step 1: Rendering the shadow map

First, we need to create a texture that can hold the scene depth. Since we need to use this as a render target, we will also need to create a surface that holds the texture's surface data. The texture must be a floating point one because of the large range of depth values. The R32F format has sufficient precision and so we use it. Here's the codelet that is used to create the texture.

if( FAILED( g_pd3dDevice->CreateTexture( SHADOW_MAP_SIZE, SHADOW_MAP_SIZE, 1,

D3DUSAGE_RENDERTARGET, D3DFMT_R32F,

D3DPOOL_DEFAULT, &g_pShadowMap,

NULL ) ) )

{

MessageBox( g_hWnd, "Unable to create shadow map!",

"Error", MB_OK | MB_ICONERROR );

return E_FAIL;

}

g_pShadowMap->GetSurfaceLevel( 0, &g_pShadowSurf );

Now, to generate the shadow map, we need to render the scene's depth to the shadow map. To do this, we must render the scene with the light's world-view-projection matrix. Here's how we build that matrix.

D3DXMatrixLookAtLH( &matView, &vLightPos, &vLightAim, &g_vUp );

D3DXMatrixPerspectiveFovLH( &matProj, D3DXToRadian(30.0f), 1.0f, 1.0f, 1024.0f );

matLightViewProj = matWorld * matView * matProj;

Here are vertex and pixel shaders for rendering the scene depth.

struct VSOUTPUT_SHADOW

{

float4 vPosition : POSITION;

float fDepth : TEXCOORD0;

};

VSOUTPUT_SHADOW VS_Shadow( float4 inPosition : POSITION )

{

VSOUTPUT_SHADOW OUT = (VSOUTPUT_SHADOW)0;

OUT.vPosition = mul( inPosition, g_matLightViewProj );

OUT.fDepth = OUT.vPosition.z;

return OUT;

}

Here, we multiply the position by the light's world-view-projection matrix (g_matLightViewProj) and use the transformed position's z-value as the depth. In the pixel shader, we output the depth as the color.

float4 PS_Shadow( VSOUTPUT_SHADOW IN ) : COLOR0

{

return float4( IN.fDepth, IN.fDepth, IN.fDepth, 1.0f );

}

Voila! We have the shadow map. Below is a color coded version of the shadow map, dark blue indicates smaller depth values, whereas light blue indicates larger depth values.

Step 2: Rendering the shadowed scene into a buffer

Next, we need to render the shadowed portions of the scene to an offscreen buffer so that we can blur it and project it back onto the scene. To do that, we first render the shadowed portions of the scene into a screen-sized fixed point texture.

if( FAILED( g_pd3dDevice->CreateTexture( SCREEN_WIDTH, SCREEN_HEIGHT, 1,

D3DUSAGE_RENDERTARGET, D3DFMT_A8R8G8B8, D3DPOOL_DEFAULT, &g_pScreenMap, NULL ) ) )

{

MessageBox( g_hWnd, "Unable to create screen map!",

"Error", MB_OK | MB_ICONERROR );

return E_FAIL;

}

g_pScreenMap->GetSurfaceLevel( 0, & g_pScreenSurf );

To get the projective texture coordinates, we need a "texture" matrix that will map the position from projection space to texture space.

float fTexOffs = 0.5 + (0.5 / (float)SHADOW_MAP_SIZE);

D3DXMATRIX matTexAdj( 0.5f, 0.0f, 0.0f, 0.0f,

0.0f, -0.5f, 0.0f, 0.0f,

0.0f, 0.0f, 1.0f, 0.0f,

fTexOffs, fTexOffs, 0.0f, 1.0f );

matTexture = matLightViewProj * matTexAdj;

We get the shadow factor as usual by depth comparison, but instead of outputting the completely lit scene, we output only the shadow factor. Here are the vertex and pixel shaders that do the job.

struct VSOUTPUT_UNLIT

{

float4 vPosition : POSITION;

float4 vTexCoord : TEXCOORD0;

float fDepth : TEXCOORD1;

};

VSOUTPUT_UNLIT VS_Unlit( float4 inPosition : POSITION )

{

VSOUTPUT_UNLIT OUT = (VSOUTPUT_UNLIT)0;

OUT.vPosition = mul( inPosition, g_matWorldViewProj );

OUT.vTexCoord = mul( inPosition, g_matTexture );

OUT.fDepth = mul( inPosition, g_matLightViewProj ).z;

return OUT;

}

We use percentage closer filtering (PCF) to smoothen out the jagged edges. To "do" PCF, we simply sample the 8 (we're using a 3x3 PCF kernel here) surrounding texels along with the center texel and take the average of all the depth comparisons.

float4 PS_Unlit( VSOUTPUT_UNLIT IN ) : COLOR0

{

float4 vTexCoords[9];

float fTexelSize = 1.0f / 1024.0f;

vTexCoords[0] = IN.vTexCoord;

vTexCoords[1] = IN.vTexCoord + float4( -fTexelSize, 0.0f, 0.0f, 0.0f );

vTexCoords[2] = IN.vTexCoord + float4( fTexelSize, 0.0f, 0.0f, 0.0f );

vTexCoords[3] = IN.vTexCoord + float4( 0.0f, -fTexelSize, 0.0f, 0.0f );

vTexCoords[6] = IN.vTexCoord + float4( 0.0f, fTexelSize, 0.0f, 0.0f );

vTexCoords[4] = IN.vTexCoord + float4( -fTexelSize, -fTexelSize, 0.0f, 0.0f );

vTexCoords[5] = IN.vTexCoord + float4( fTexelSize, -fTexelSize, 0.0f, 0.0f );

vTexCoords[7] = IN.vTexCoord + float4( -fTexelSize, fTexelSize, 0.0f, 0.0f );

vTexCoords[8] = IN.vTexCoord + float4( fTexelSize, fTexelSize, 0.0f, 0.0f );

float fShadowTerms[9];

float fShadowTerm = 0.0f;

for( int i = 0; i < 9; i++ )

{

float A = tex2Dproj( ShadowSampler, vTexCoords[i] ).r;

float B = (IN.fDepth ?0.1f);

fShadowTerms[i] = A < B ? 0.0f : 1.0f;

fShadowTerm += fShadowTerms[i];

}

fShadowTerm /= 9.0f;

return fShadowTerm;

}

The screen buffer is good to go! Now all we need to do is blur this and project it back onto the scene in screen space.

Step 3: Blurring the screen buffer

We use a seperable gaussian filter to blur the screen buffer, but one could also use a Poisson filter. The render targets this time are A8R8G8B8 textures accompanied by corresponding surfaces. We need 2 render targets, one for the horizontal pass and the other for the vertical pass.

for( int i = 0; i < 2; i++ )

{

if( FAILED( g_pd3dDevice->CreateTexture( SCREEN_WIDTH, SCREEN_HEIGHT, 1,

D3DUSAGE_RENDERTARGET,

D3DFMT_A8R8G8B8, D3DPOOL_DEFAULT,

&g_pBlurMap[i], NULL ) ) )

{

MessageBox( g_hWnd, "Unable to create blur map!",

"Error", MB_OK | MB_ICONERROR );

return E_FAIL;

}

g_pBlurMap[i]->GetSurfaceLevel( 0, & g_pBlurSurf[i] );

}

We generate 15 Gaussian offsets and their corresponding weights using the following functions.

float GetGaussianDistribution( float x, float y, float rho )

{

float g = 1.0f / sqrt( 2.0f * 3.141592654f * rho * rho );

return g * exp( -(x * x + y * y) / (2 * rho * rho) );

}

void GetGaussianOffsets( bool bHorizontal, D3DXVECTOR2 vViewportTexelSize,

D3DXVECTOR2* vSampleOffsets, float* fSampleWeights )

{

fSampleWeights[0] = 1.0f * GetGaussianDistribution( 0, 0, 2.0f );

vSampleOffsets[0] = D3DXVECTOR2( 0.0f, 0.0f );

if( bHorizontal )

{

for( int i = 1; i < 15; i += 2 )

{

vSampleOffsets[i + 0] = D3DXVECTOR2( i * vViewportTexelSize.x, 0.0f );

vSampleOffsets[i + 1] = D3DXVECTOR2( -i * vViewportTexelSize.x, 0.0f );

fSampleWeights[i + 0] = 2.0f * GetGaussianDistribution( float(i + 0), 0.0f, 3.0f );

fSampleWeights[i + 1] = 2.0f * GetGaussianDistribution( float(i + 1), 0.0f, 3.0f );

}

}

else

{

for( int i = 1; i < 15; i += 2 )

{

vSampleOffsets[i + 0] = D3DXVECTOR2( 0.0f, i * vViewportTexelSize.y );

vSampleOffsets[i + 1] = D3DXVECTOR2( 0.0f, -i * vViewportTexelSize.y );

fSampleWeights[i + 0] = 2.0f * GetGaussianDistribution( 0.0f, float(i + 0), 3.0f );

fSampleWeights[i + 1] = 2.0f * GetGaussianDistribution( 0.0f, float(i + 1), 3.0f );

}

}

}

To blur the screen buffer, we set the blur map as the render target and render a screen sized quad with the following vertex and pixel shaders.

struct VSOUTPUT_BLUR

{

float4 vPosition : POSITION;

float2 vTexCoord : TEXCOORD0;

};

VSOUTPUT_BLUR VS_Blur( float4 inPosition : POSITION, float2 inTexCoord : TEXCOORD0 )

{

VSOUTPUT_BLUR OUT = (VSOUTPUT_BLUR)0;

OUT.vPosition = inPosition;

OUT.vTexCoord = inTexCoord;

return OUT;

}

float4 PS_BlurH( VSOUTPUT_BLUR IN ): COLOR0

{

float4 vAccum = float4( 0.0f, 0.0f, 0.0f, 0.0f );

for(int i = 0; i < 15; i++ )

{

vAccum += tex2D( ScreenSampler, IN.vTexCoord + g_vSampleOffsets[i] ) * g_fSampleWeights[i];

}

return vAccum;

}

float4 PS_BlurV( VSOUTPUT_BLUR IN ): COLOR0

{

float4 vAccum = float4( 0.0f, 0.0f, 0.0f, 0.0f );

for( int i = 0; i < 15; i++ )

{

vAccum += tex2D( BlurHSampler, IN.vTexCoord + g_vSampleOffsets[i] ) * g_fSampleWeights[i];

}

return vAccum;

}

There, the blur maps are ready. To increase the blurriness of the shadows, increase the texel sampling distance. The last step, of course, is to project the blurred map back onto the scene in screen space.

After first Gaussian pass)

After second Gaussian pass

Step 4: Rendering the shadowed scene

To project the blur map onto the scene, we render the scene as usual, but project the blur map using screen-space coordinates. We use the clip space position with some hard-coded math to generate the screen-space coordinates. The vertex and pixel shaders shown below render the scene with per-pixel lighting along with shadows.

struct VSOUTPUT_SCENE

{

float4 vPosition : POSITION;

float2 vTexCoord : TEXCOORD0;

float4 vProjCoord : TEXCOORD1;

float4 vScreenCoord : TEXCOORD2;

float3 vNormal : TEXCOORD3;

float3 vLightVec : TEXCOORD4;

float3 vEyeVec : TEXCOORD5;

};

VSOUTPUT_SCENE VS_Scene( float4 inPosition : POSITION, float3 inNormal : NORMAL,

float2 inTexCoord : TEXCOORD0 )

{

VSOUTPUT_SCENE OUT = (VSOUTPUT_SCENE)0;

OUT.vPosition = mul( inPosition, g_matWorldViewProj );

OUT.vTexCoord = inTexCoord;

OUT.vProjCoord = mul( inPosition, g_matTexture );

OUT.vScreenCoord.x = ( OUT.vPosition.x * 0.5 + OUT.vPosition.w * 0.5 );

OUT.vScreenCoord.y = ( OUT.vPosition.w * 0.5 - OUT.vPosition.y * 0.5 );

OUT.vScreenCoord.z = OUT.vPosition.w;

OUT.vScreenCoord.w = OUT.vPosition.w;

float4 vWorldPos = mul( inPosition, g_matWorld );

OUT.vNormal = mul( inNormal, g_matWorldIT );

OUT.vLightVec = g_vLightPos.xyz - vWorldPos.xyz;

OUT.vEyeVec = g_vEyePos.xyz - vWorldPos.xyz;

return OUT;

}

We add an additional spot term by projecting down a spot texture from the light. This not only simulates a spot lighting effect, it also cuts out parts of the scene outside the shadow map. The spot map is projected down using standard projective texturing.

float4 PS_Scene( VSOUTPUT_SCENE IN ) : COLOR0

{

IN.vNormal = normalize( IN.vNormal );

IN.vLightVec = normalize( IN.vLightVec );

IN.vEyeVec = normalize( IN.vEyeVec );

float4 vColor = tex2D( ColorSampler, IN.vTexCoord );

float ambient = 0.0f;

float diffuse = max( dot( IN.vNormal, IN.vLightVec ), 0 );

float specular = pow(max(dot( 2 * dot( IN.vNormal, IN.vLightVec ) * IN.vNormal

- IN.vLightVec, IN.vEyeVec ), 0 ), 8 );

if( diffuse == 0 ) specular = 0;

float fShadowTerm = tex2Dproj( BlurVSampler, IN.vScreenCoord );

float fSpotTerm = tex2Dproj( SpotSampler, IN.vProjCoord );

return (ambient * vColor) +

(diffuse * vColor * g_vLightColor * fShadowTerm * fSpotTerm) +

(specular * vColor * g_vLightColor.a * fShadowTerm * fSpotTerm);

}

That's it! We have soft edged shadows that look quite nice! The advantage of this technique is that it completely removes edge-aliasing artifacts that the shadow mapping technique suffers from. Another advantage is that one can generate soft shadows for multiple lights with a small memory overhead. When dealing with multiple lights, all you need is one shadow map per light, whereas the screen and blur buffers can be common to all the lights! Finally, this technique can be applied to both shadow maps and shadow volumes, so irrespective of the shadowing technique, you can generate soft-edged shadows with this method. One disadvantage is that this method is a wee bit fill-rate intensive due to the Gaussian filter. This can be minimized by using smaller blur buffers and slightly sacrificing the visual quality.

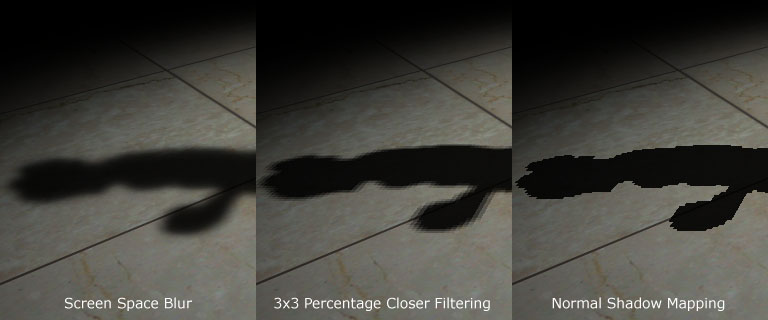

Here's a comparison between the approach mentioned here, 3x3 percentage closer filtering and normal shadow mapping.

Thank you for reading my article. I hope you liked it. If you have any doubts, questions or comments, please feel free to mail me at anidex@yahoo.com. Here's the source code.

References

- Hardware Shadow Mapping. Cass Everitt, Ashu Rege and Cem Cebenoyan.

- Hardware-accelerated Rendering of Antialiased Shadows with Shadow Maps. Stefan Brabec and Hans-Peter Seidel.

Discuss this article in the forums

Date this article was posted to GameDev.net: 1/18/2005

(Note that this date does not necessarily correspond to the date the article was written)

See Also:

Hardcore Game Programming

Shadows

------------------------------------------------------------------------------------------------------------------------------------------------------

------------------------------------------------------------------------------------------------------------------------------------------------------

介绍

...

1

近况

...

1

软阴影

...

2

那么它如何工作?

...

2

步骤一:渲染阴影映射图(

shadow map

)

...

2

步骤二:将带阴影的场景渲染到缓冲中

...

4

步骤三:对屏幕缓冲进行模糊

...

7

步骤四:渲染带阴影的场景

...

11

参考文献

...

13

最初,动态阴影技术只有在有限的几种情况下才能实现。但是,随着强大的可编程图形硬件的面世,动态阴影技术已经完全取代了以前的如

light map

这样的静态阴影技术及像

projected shadows

这样的半动态阴影技术。目前两种流行的动态阴影技术分别是

shadow volumes

和

shadow mapping

。

shadow volumes

技术是一种基于几何形体的技术,它需要几何体在一定方向的灯光下的轮廓去产生一个封闭的容积,然后通过光线的投射就可以决定场景的阴影部分(常常使用模板缓冲去模拟光线的投射)。这项技术是像素精确的,不会产生任何的锯齿现象,但是与其他的技术一样,它也有缺点。最主要的两个问题一是极度依赖几何形体,二是需要非常高的填充率。由于这些缺点,使得

shadow mapping

技术渐渐地变得更为流行起来。

阴影映射技术是一种图像空间的技术,它首先在以光源位置作为视点的情况下渲染整个场景的深度信息,然后再使用这些深度信息去决定场景的哪一部分是处于阴影之中。虽然这项技术有许多优点,但它有锯齿现象并且依赖

z-

缓冲技术。不过它的优点足以抵消它的这些缺点,因此本文选用了这项技术。

硬阴影破坏了场景的真实性,因此,我们必须仿造软阴影来提升场景的可视效果。许多狂热的学者都拿出了描述软阴影技术的论文。但实际上,这些技术大部分都是很难在一个较为复杂的场景下实现实时效果。直到我们拥有了能克服这些技术局限性的硬件后,我们才真正的采用了这些方法。

本文采用了基于图像空间的方法,并利用

shadow mapping

技术来产生软阴影。这个方法不能产生完美的阴影,因为没有真正的模拟出本影和半影,但它不仅仅可以解决阴影映射技术的锯齿现象,还能以赏心悦目的软阴影来提升场景的可视效果。

首先,我们生成阴影映射图(

shadow map

),具体方法是以光源位置为视点,将场景的深度信息渲染到浮点格式的缓冲中去。然后我们不是像通常那样在阴影下渲染场景,而是将阴影区域渲染到一幅屏幕大小的缓冲中去,这样就可以使用

bloom filter

进行模糊并将它投射回屏幕空间中使其显示在屏幕上。是不是很简单?

本文只处理了聚光灯源这种情况,但可以很方便的推广到点光源上。

下面是具体步骤:

通过将深度信息写入浮点纹理的方法产生阴影映射图(

shadow map

)。

深度比较后将场景的阴影部分渲染到定点纹理,此时不要任何的灯光。

使用

bloom filter

模糊上一步的纹理,本文采用了

separable Gaussian filter

,

也可用其他的方法。

在所有的光源下将上一步模糊后的纹理投射到屏幕空间中,从而得到最终的效果。

步骤

一

:渲染阴影映射图(

shadow map

)

首先,我们需要创建一个能保存屏幕深度信息的纹理。因为要把这幅纹理作为

render target

,所以我们还要创建一个表面(

surface

)来保存纹理的表面信息。由于深度信息值的范围很大因此这幅纹理必须是浮点类型的。

R32F

的格式有足够的精度可以满足我们的需要。下面是创建纹理的代码片断:

if

(

FAILED( g_pd3dDevice->CreateTexture( SHADOW_MAP_SIZE,

SHADOW_MAP_SIZE, 1, D3DUSAGE_RENDERTARGET,

D3DFMT_R32F, D3DPOOL_DEFAULT, &g_pShadowMap,

NULL ) ) )

{

MessageBox( g_hWnd, "Unable to create shadow map!",

"Error", MB_OK | MB_ICONERROR );

return

E_FAIL;

}

g_pShadowMap->GetSurfaceLevel( 0, &g_pShadowSurf );

为了完成阴影映射图,我们要把场景的深度信息渲染到阴影映射图中。为此在光源的世界

-

视点

-

投影变换矩阵(

world-view-projection matrix

)下渲染整个场景。下面是构造这些矩阵的代码:

D3DXMatrixLookAtLH(

&matView, &vLightPos, &vLightAim, &g_vUp );

D3DXMatrixPerspectiveFovLH(

&matProj, D3DXToRadian(30.0f),

1.0f

, 1.0f, 1024.0f );

//

实际上作者在例程中使用的是

D3DXMatrixOrthoLH( &matProj, 45.0f, 45.0f, 1.0f, //1024.0f )

。这个函数所构造的

project

矩阵与

D3DXMatrixPerspectiveFovLH

()构造的

//

不同之处在于:它没有透视效果。即物体的大小与视点和物体的距离没有关系。显然例

//

程中模拟的是平行光源(

direction light

),而这里模拟的是聚光灯源(

spot light

不知翻译得对不对?)

matLightViewProj

= matWorld * matView * matProj;

下面是渲染场景深度的顶点渲染和像素渲染的代码:

struct

VSOUTPUT_SHADOW

{

float4

vPosition : POSITION;

float

fDepth

: TEXCOORD0;

};

VSOUTPUT_SHADOW VS_Shadow(float4 inPosition : POSITION )

{

VSOUTPUT_SHADOW OUT = (VSOUTPUT_SHADOW)0;

OUT.vPosition = mul( inPosition, g_matLightViewProj );

OUT.fDepth = OUT.vPosition.z;

return

OUT;

}

这里我们将顶点的位置与变换矩阵相乘,并将变换后的

z

值作为深度。在像素渲染中将深度值以颜色(

color

)的方式输出。

float4

PS

_Shadow( VSOUTPUT_SHADOW IN ) : COLOR0

{

return

float4( IN.fDepth, IN.fDepth, IN.fDepth, 1.0f );

}

瞧,我们完成了阴影映射图,下面就是以颜色方式输出的阴影映射图,深蓝色部分表明较小的深度值,浅蓝色部分表明较大的深度值。

下面,我们要把场景的带阴影的部分渲染到并不立即显示的缓冲中,使我们可以进行模糊处理,然后再将它投射回屏幕。首先把场景的阴影部分渲染到一幅屏幕大小的定点纹理中。

if

(

FAILED( g_pd3dDevice->CreateTexture( SCREEN_WIDTH,

SCREEN_HEIGHT, 1, D3DUSAGE_RENDERTARGET,

D3DFMT_A8R8G8B8, D3DPOOL_DEFAULT,

&g_pScreenMap, NULL ) ) )

{

MessageBox( g_hWnd, "Unable to create screen map!",

"Error", MB_OK | MB_ICONERROR );

return

E_FAIL;

}

g_pScreenMap->GetSurfaceLevel( 0, & g_pScreenSurf );

为了获得投影纹理坐标(

projective texture coordinates

),我们需要一个纹理矩阵,作用是把投影空间(

projection space

)中的位置变换到纹理空间(

texture space

)中去。

float

fTexOffs = 0.5 + (0.5 / (float)SHADOW_MAP_SIZE);

D3DXMATRIX matTexAdj(0.5f, 0.0f, 0.0f, 0.0f,

0.0f, -0.5f, 0.0f, 0.0f,

0.0f, 0.0f, 1.0f, 0.0f,

fTexOffs, fTexOffs, 0.0f, 1.0f );

//

这个矩阵是把

projection space

中范围为

[-1

,

1]

的

x,y

坐标值转换到纹理空间中

//[0

,

1]

的范围中去。注意

y

轴的方向改变了。那个

(0.5 / (float)SHADOW_MAP_SIZE)

//

的值有什么作用我还不清楚,原文也没有说明。

matTexture

= matLightViewProj * matTexAdj;

我们像往常那样通过深度的比较来获得阴影因数,但随后并不是像平常那样输出整个照亮了的场景,我们只输出阴影因数。下面的顶点渲染和像素渲染完成这个工作。

struct

VSOUTPUT_UNLIT

{

float4

vPosition : POSITION;

float4

vTexCoord : TEXCOORD0;

float

fDepth

: TEXCOORD1;

};

VSOUTPUT_UNLIT VS_Unlit(float4 inPosition : POSITION )

{

VSOUTPUT_UNLIT OUT = (VSOUTPUT_UNLIT)0;

OUT.vPosition = mul( inPosition, g_matWorldViewProj );

OUT.vTexCoord = mul( inPosition, g_matTexture );

OUT.fDepth = mul( inPosition, g_matLightViewProj ).z;

return

OUT;

}

我们采用

percentage closer filtering (PCF)

来平滑锯齿边缘。为了完成“

PCF

”,我们简单的对周围

8

个纹理点进行采样,并取得它们深度比较的平均值。

tex2Dproj

()函数以及转换到纹理空间的向量(x,y,z,w)对纹理进//行采样。这与d3d9sdk中shadowmap例子用tex2D()及向量(x,y)进行采样不同。具体//区别及原因很容易从程序中看出,我就不再啰嗦了。

float4

PS

_Unlit( VSOUTPUT_UNLIT IN ) : COLOR0

{

float4

vTexCoords[9];

float

fTexelSize = 1.0f / 1024.0f;

VTexCoords[0] = IN.vTexCoord;

vTexCoords[1] = IN.vTexCoord + float4( -fTexelSize, 0.0f, 0.0f, 0.0f );

vTexCoords[2] = IN.vTexCoord + float4( fTexelSize, 0.0f, 0.0f, 0.0f );

vTexCoords[3] = IN.vTexCoord + float4( 0.0f, -fTexelSize, 0.0f, 0.0f );

vTexCoords[6] = IN.vTexCoord + float4( 0.0f, fTexelSize, 0.0f, 0.0f );

vTexCoords[4] = IN.vTexCoord + float4( -fTexelSize, -fTexelSize, 0.0f, 0.0f );

vTexCoords[5] = IN.vTexCoord + float4( fTexelSize, -fTexelSize, 0.0f, 0.0f );

vTexCoords[7] = IN.vTexCoord + float4( -fTexelSize, fTexelSize, 0.0f, 0.0f );

vTexCoords[8] = IN.vTexCoord + float4( fTexelSize, fTexelSize, 0.0f, 0.0f );

float

fShadowTerms[9];

float

fShadowTerm = 0.0f;

for(

int i = 0; i < 9; i++ )

{

float

A = tex2Dproj( ShadowSampler, vTexCoords[i] ).r;

float

B = (IN.fDepth - 0.1f);

fShadowTerms[i] = A < B ? 0.0f :1.0f;

fShadowTerm

+= fShadowTerms[i];

}

fShadowTerm /= 9.0f;

return

fShadowTerm;

}

屏幕缓冲完成了,我们还需要进行模糊工作。

我们采用

seperable gaussian filter

模糊屏幕缓冲。但我们也可以用

Poisson filter

。这次的

render targets

是

A8R8G8B8

的纹理和相关的表面。我们需要两个

render targets

,一个进行水平阶段,一个进行垂直阶段。

for

(

int i = 0; i < 2; i++ )

{

if( FAILED( g_pd3dDevice->CreateTexture( SCREEN_WIDTH,

SCREEN_HEIGHT, 1, D3DUSAGE_RENDERTARGET,

D3DFMT_A8R8G8B8, D3DPOOL_DEFAULT,

&g_pBlurMap[i], NULL ) ) )

{

MessageBox( g_hWnd, "Unable to create blur map!",

"Error", MB_OK | MB_ICONERROR );

return

E_FAIL;

}

g_pBlurMap[i]->GetSurfaceLevel( 0, & g_pBlurSurf[i] );

}

我们用下面的代码生成

15

个高斯偏移量(

Gaussian offsets

)及他们的权重(

corresponding weights

)。

float

GetGaussianDistribution( float x, float y, float rho )

{

float

g = 1.0f / sqrt( 2.0f * 3.141592654f * rho * rho );

return

g * exp( -(x * x + y * y) / (2 * rho * rho) );

}

void

GetGaussianOffsets( bool bHorizontal,

D3DXVECTOR2 vViewportTexelSize,

D3DXVECTOR2* vSampleOffsets,

float

* fSampleWeights )

{

fSampleWeights[0] = 1.0f * GetGaussianDistribution( 0, 0, 2.0f );

vSampleOffsets[0] = D3DXVECTOR2( 0.0f, 0.0f );

if( bHorizontal )

{

for(

int i = 1; i < 15; i += 2 )

{

vSampleOffsets[i + 0] = D3DXVECTOR2( i * vViewportTexelSize.x, 0.0f );

vSampleOffsets[i + 1] = D3DXVECTOR2( -i * vViewportTexelSize.x, 0.0f );

fSampleWeights[i + 0] = 2.0f * GetGaussianDistribution( float(i + 0), 0.0f, 3.0f );

fSampleWeights[i + 1] = 2.0f * GetGaussianDistribution( float(i + 1), 0.0f, 3.0f );

}

}

else

{

for(

int i = 1; i < 15; i += 2 )

{

vSampleOffsets[i + 0] = D3DXVECTOR2( 0.0f, i * vViewportTexelSize.y );

vSampleOffsets[i + 1] = D3DXVECTOR2( 0.0f, -i * vViewportTexelSize.y );

fSampleWeights[i + 0] = 2.0f * GetGaussianDistribution( 0.0f, float(i + 0), 3.0f );

fSampleWeights[i + 1] = 2.0f * GetGaussianDistribution( 0.0f, float(i + 1), 3.0f );

}

}

}

为了模糊屏幕缓冲,我们将模糊映射图(

blur map

)作为

render target

,使用下面的顶点渲染和像素渲染代码渲染一个与屏幕等大的方块。

//

作者在程序中预先定义的屏幕大小是1024 * 768,而随后定义的与屏幕等大的方块为:

//

pVertices[0].p = D3DXVECTOR4( 0.0f, 0.0f, 0.0f, 1.0f );

// pVertices[1].p = D3DXVECTOR4( 0.0f, 768 / 2, 0.0f, 1.0f );

// pVertices[2].p = D3DXVECTOR4( 1024 / 2, 0.0f, 0.0f, 1.0f );

// pVertices[3].p = D3DXVECTOR4( 1024 / 2, 768 / 2, 0.0f, 1.0f );

//

这种方法与d3dsdk中HDRLight中获得render target

的

width and height

然后再构造的

//

方法不同

:

// svQuad[0].p = D3DXVECTOR4(-0.5f, -0.5f, 0.5f, 1.0f);

// svQuad[1].p = D3DXVECTOR4(Width-0.5f, -0.5f, 0.5f, 1.0f);

// svQuad[2].p = D3DXVECTOR4(-0.5f, Height-0.5f, 0.5f, 1.0f);

// svQuad[3].p = D3DXVECTOR4(Width-0.5f,fHeight-0.5f, 0.5f, 1.0f);

//

而一般定义的窗口大小往往与从render target获得的width and height不相同。

//

而二者的fvf都是D3DFVF_XYZRHW。这两种方法有什么区别我一直没想通。

struct

VSOUTPUT_BLUR

{

float4

vPosition : POSITION;

float2

vTexCoord : TEXCOORD0;

};

VSOUTPUT_BLUR VS_Blur(float4 inPosition : POSITION, float2 inTexCoord : TEXCOORD0 )

{

VSOUTPUT_BLUR OUT = (VSOUTPUT_BLUR)0;

OUT.vPosition = inPosition;

OUT.vTexCoord = inTexCoord;

return

OUT;

}

float4

PS_BlurH( VSOUTPUT_BLUR IN ): COLOR0

{

float4

vAccum = float4( 0.0f, 0.0f, 0.0f, 0.0f );

for(

int i = 0; i < 15; i++ )

{

vAccum += tex2D( ScreenSampler, IN.vTexCoord + g_vSampleOffsets[i] ) * g_fSampleWeights[i];

}

return

vAccum;

}

float4

PS_BlurV( VSOUTPUT_BLUR IN ): COLOR0

{

float4

vAccum = float4( 0.0f, 0.0f, 0.0f, 0.0f );

for(

int i = 0; i < 15; i++ )

{

vAccum += tex2D( BlurHSampler, IN.vTexCoord + g_vSampleOffsets[i] ) * g_fSampleWeights[i];

}

return

vAccum;

}

这里,模糊映射图已经完成了,为了增加阴影的模糊程度,增加了纹理上点的采样距离。最后一步自然是将模糊后的纹理图投射回屏幕空间使其显示在屏幕上。

After first Gaussian pass

After second Gaussian pass

为了将模糊后的纹理投射到屏幕上,我们像平常那样渲染场景,但投影模糊后的纹理时要使用屏幕空间的坐标。我们使用裁剪空间的坐标和一些数学方法来产生屏幕空间的坐标。下面的顶点渲染和像素渲染将完成这个工作:

struct

VSOUTPUT_SCENE

{

float4

vPosition : POSITION;

float2

vTexCoord : TEXCOORD0;

float4

vProjCoord : TEXCOORD1;

float4

vScreenCoord : TEXCOORD2;

float3

vNormal : TEXCOORD3;

float3

vLightVec : TEXCOORD4;

float3

vEyeVec : TEXCOORD5;

};

VSOUTPUT_SCENE VS_Scene(float4 inPosition : POSITION,

float3

inNormal : NORMAL,

float2

inTexCoord : TEXCOORD0 )

{

VSOUTPUT_SCENE OUT = (VSOUTPUT_SCENE)0;

OUT.vPosition = mul( inPosition, g_matWorldViewProj );

OUT.vTexCoord = inTexCoord;

OUT.vProjCoord = mul( inPosition, g_matTexture );

裁剪空间的坐标转换到屏幕空间的坐标,方法和

裁剪空间的坐标转换

//

纹理空间的坐标的方法很相似。

OUT.vScreenCoord.x = ( OUT.vPosition.x * 0.5 + OUT.vPosition.w * 0.5 );

OUT.vScreenCoord.y = ( OUT.vPosition.w * 0.5 - OUT.vPosition.y * 0.5 );

OUT.vScreenCoord.z = OUT.vPosition.w;

OUT.vScreenCoord.w = OUT.vPosition.w;

float4 vWorldPos = mul( inPosition, g_matWorld );

OUT.vNormal = mul( inNormal, g_matWorldIT );

OUT.vLightVec = g_vLightPos.xyz - vWorldPos.xyz;

OUT.vEyeVec = g_vEyePos.xyz - vWorldPos.xyz;

return

OUT;

}

float4

PS_Scene( VSOUTPUT_SCENE IN ) : COLOR0

{

IN.vNormal = normalize( IN.vNormal );

IN.vLightVec = normalize( IN.vLightVec );

IN.vEyeVec = normalize( IN.vEyeVec );

float4

vColor = tex2D( ColorSampler, IN.vTexCoord );

float

ambient = 0.0f;

float

diffuse = max( dot( IN.vNormal, IN.vLightVec ), 0 );

float

specular = pow(max(dot( 2 * dot( IN.vNormal, IN.vLightVec ) * IN.vNormal

- IN.vLightVec, IN.vEyeVec ), 0 ), 8 );

if( diffuse == 0 ) specular = 0;

float

fShadowTerm = tex2Dproj( BlurVSampler, IN.vScreenCoord );

float

fSpotTerm = tex2Dproj( SpotSampler, IN.vProjCoord );

return

(ambient * vColor) +

(diffuse * vColor * g_vLightColor * fShadowTerm * fSpotTerm) +

(specular * vColor * g_vLightColor.a * fShadowTerm * fSpotTerm);

}

终于完成了。看上去不错。该技术的优点一是解决了锯齿问题,二是在多光源,低内存下实现了软阴影。另外该技术与阴影生成方法无关,可以很容易的在

shadow volumes

技术中采用这项技术。缺点是由于进行了模糊处理而需要一些填充率。

下面是不同阶段的效果比较图: